1(6) 2009

ARCHITECTURE AND MODERN INFORMATION TECHNOLOGIES

INTERNATIONAL ELECTRONIC SCIENTIFIC AND EDUCATIONAL JOURNAL ON SCIENTIFIC, TECHNICAL, EDUCATIONAL AND METHODOLOGICAL ASPECTS OF MODERN ARCHITECTURAL EDUCATION AND DESIGN USING VIDEO AND COMPUTER TECHNOLOGIES

PUT ON YOUR GLASSES AND PRESS RIGHT MOUSE BUTTON.

AR-based user interaction using laser pointer tracking

Ch. Tonn 1, F. Petzold 1, D. Donath 2

1, 2 Bauhaus University Weimar, Germany

1http://uni-weimar.de/jpai | 2http://infar.architektur.uni-weimar.de

Designing and planning in existing built contexts

The use of computers in architectural practice is widespread and complements traditional tools such as drawings and physical models. Computers can support the design process in three dimensions with the help of a building information model (BIM); however, the output devices are still generally ‘traditional’ 2D devices, such as screens or plotters.

Rapid technological advances are, however, expanding the possibilities of architectural design, for instance through three-dimensional VR and AR environments. Immersive and semi-immersive projection displays, such as CAVEs™ and workbenches, are already used to support virtual reality applications in many professional domains, including the field of architecture. The visualization of data using such displays requires dedicated rooms for setting up non-mobile screens and allows one to interact only with purely virtual information.

The current software and hardware market in this field is characterized by a variety of individual products. Most of them are adaptations of CAAD systems, specific computer-supported solutions for new buildings, or adaptations of products from other fields, e.g. VR/AR-supported design in the automobile sector or SAR applications (Bimber and Raskar, 2005).

The Framework

Based upon the analysis and the identification of deficiencies, an IT concept was developed with the aim of providing a set of tools for the computer-assisted surveying and designing of buildings. The individual tools provide a continuous, evolutionary, flexible and dynamically variable system that addresses aspects ranging from the initial site visit to 1:1 designing on site.

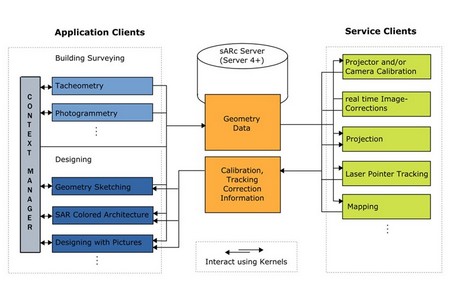

The concept of the system follows a modular principle. Different modules for information capture, design and planning can be combined as required. The individual modules form a continuous, extensible and flexible system that can be adapted dynamically in real time and covers all aspects from the initial site visit to detail planning. Each tool was developed for an individual aspect, taking into account its role and the requirements of the entire planning process. This concept (Fig. 1) was developed in the earlier research project “Collaborative Research Center 524” and adapted and expanded for this framework (Petzold and Donath, 2004; Donath, Petzold et al., 2001).

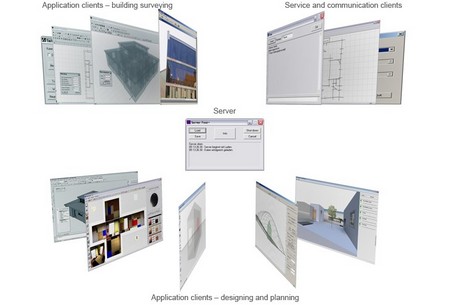

Selected aspects have been realised as prototypes on an experimental platform and demonstrate the feasibility of the system. The experimental platform consists of a server and an extendable series of different tools in the form of clients (Fig. 2). The server stores data permanently and centrally and interacts with the various clients. Redundant data storage within the clients reduces the continuous data-transfer load on the server (Petzold et al., 2008).

Fig. 1. Schematic diagram of the software framework

Fig. 2. Examples of application clients and service clients

The project “spatial augmented reality for architecture” focuses on one aspect of the research area “Planning and Building in Existing Built Environments”: the support of surveying and designing on site with and within existing buildings.

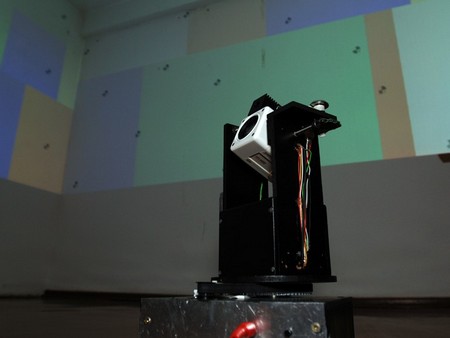

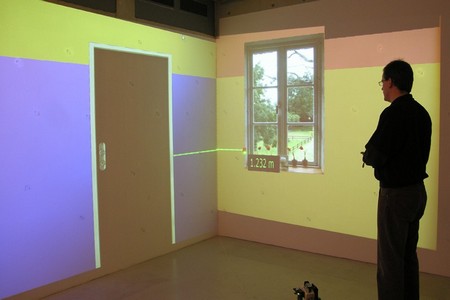

The input device

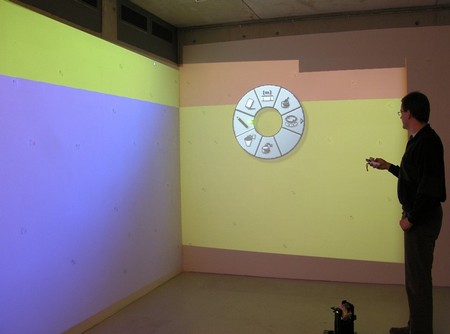

As part of the research project, we developed a laser-pointer tracking technique that utilises a Pan-Tilt-Zoom (PTZ) camera (Fig. 3) and enables the laser pointer to be used as an input device in architectural environments (Kurz et al., 2007). A zoom camera captures the bright point of the laser pointer on the surface of the building, and its position in 3D space is then calculated from the known dimensions of the room. If the point moves outside the zoom camera’s field of vision, its movement is either continued automatically or its position is determined using a second fish-eye context camera. In initial pilot testing of the laser-pointer tracking system, gestures were used to activate particular functions. For example, a “time gesture”, in which the point remains on the same spot for more than 3 seconds, activated the distance measurement function at that point (Fig. 4). In practice this proved too imprecise, because it is difficult to hold the pointer still for so long. One solution is to add buttons to the laser pointer.
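The position calculation described above can be illustrated with a simple ray cast. The following is only a minimal sketch, not the tracking method of Kurz et al. (2007): it assumes a rectangular room aligned with the coordinate axes, a camera located inside the room, and a camera orientation given as pan and tilt angles. The function names `ray_direction` and `locate_laser_dot` are hypothetical.

```python
import math

def ray_direction(pan_deg, tilt_deg):
    """Unit viewing direction for a pan angle (about the vertical z axis)
    and a tilt angle (elevation above the horizontal plane)."""
    pan, tilt = math.radians(pan_deg), math.radians(tilt_deg)
    return (math.cos(tilt) * math.cos(pan),
            math.cos(tilt) * math.sin(pan),
            math.sin(tilt))

def locate_laser_dot(camera_pos, pan_deg, tilt_deg, room_size):
    """Intersect the viewing ray with the surfaces of a box-shaped room
    spanning (0, 0, 0) to room_size. Assumes the camera is inside the room."""
    direction = ray_direction(pan_deg, tilt_deg)
    best_t = math.inf
    for axis in range(3):
        d = direction[axis]
        if abs(d) < 1e-12:
            continue  # ray runs parallel to this pair of surfaces
        # The ray can only leave through the surface it is travelling towards.
        plane = room_size[axis] if d > 0 else 0.0
        t = (plane - camera_pos[axis]) / d
        if 0 < t < best_t:
            best_t = t
    if math.isinf(best_t):
        raise ValueError("ray does not hit any room surface")
    return tuple(camera_pos[i] + best_t * direction[i] for i in range(3))

# Assumed example: a 6 m x 4 m x 3 m room, camera at 1.5 m height.
hit = locate_laser_dot((2.0, 2.0, 1.5), 45.0, 0.0, (6.0, 4.0, 3.0))
print([round(c, 3) for c in hit])
```

A real building surface is rarely a perfect box, so in practice the ray would be intersected with the surveyed geometry model instead of six planes.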

Fig. 3. Current PTZ camera prototype

Fig. 4. Initial interaction on site

For this we chose a Nintendo Wiimote®. In addition to several buttons, this device has a movement sensor, an IR camera and a Bluetooth interface. Moreover, WiiUse, the freely available C++ control library (Michael Laforest 2007, http://www.wiiuse.net/), made it possible to use the device’s features for our own purposes straight away. The wireless Bluetooth connection also makes it possible to move around freely within a radius of approximately 10 metres, which is sufficient for our purposes. A further client was developed and integrated into the software framework that allows other application clients to access the Wiimote® functionality. In the end it proved easier to dismantle the laser pointer and attach it to the Wiimote® than to add buttons to the laser pointer (Fig. 5, Fig. 6).

Fig. 5. Wiimote® and the laser pointer attachment

Fig. 6. The complete input device

The on-site interaction concept

Working directly on site, surrounded by the building fabric, is in certain respects fundamentally different from working at a desk and screen. When working on a CAAD model on a 2D display, one has an on-screen user interface with menu bar, toolbars and palettes, and one or more views of the CAAD model. When working within a building, by contrast, the display surfaces are themselves part of the architecture and can have a complex surface geometry. There is no frame around the screen, and the Start menu in the bottom left corner disappears among the overlay of projections.

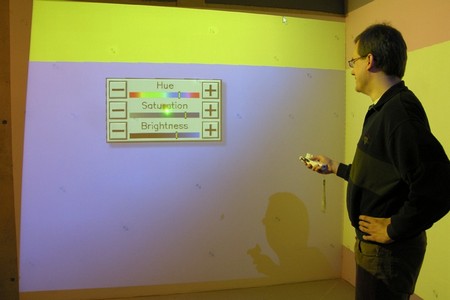

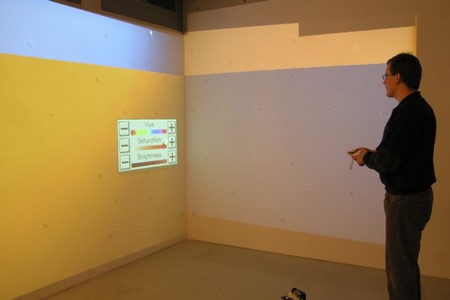

Working on site also places new demands on the presentation quality and on interaction. When one works at a scale of 1:1, one also wants to experience the design, colour and materials of the space at true scale. Permanent menus and user interface destroy this illusion. Other forms of interaction such as gesture-recognition, speech input and context menus interfere less with the projection of the information.

Pie-menus

One possible approach to keeping the projected area free of distracting widgets is to use contextual pie-menus that appear as required. Context pie-menus are already familiar as they are used for browser plug-ins and in various operating systems. The available options and tools are arranged as icons around a circle centred on the dot of the laser beam (Fig. 7). By moving the laser pointer towards a certain point on the circle, the appropriate option is selected or a sub-menu pops up. With the help of text descriptions for the icons (Fig. 8), the designer quickly gets used to using the laser pointer to select a certain option. In this way, selecting a tool also helps the user to establish a laser pointer gesture.

Fig. 7. Pie-menu in use on site

Fig. 8. Desktop view of the pie-menu with additional text descriptions

According to Callahan et al. (1988), pie-menus are easier to use than rectangular linear context menus, as each option is the same distance away from the centre. One need only move the pointer into the right pie-section of the circle to select the function.
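Selecting an option by direction alone can be sketched in a few lines. The example below is an illustration under stated assumptions, not the implementation used in the prototypes: it assumes 2D menu coordinates with the y axis pointing up, equal-sized sectors with the first option centred at the 12 o'clock position, and a small dead zone around the laser dot in which nothing is selected. The function name `pie_selection`, the `dead_zone` parameter and the option labels are hypothetical.

```python
import math

def pie_selection(center, pointer, options, dead_zone=20.0):
    """Return the option whose sector contains the pointer, or None while
    the pointer is still inside the dead zone around the menu centre."""
    dx = pointer[0] - center[0]
    dy = pointer[1] - center[1]
    if math.hypot(dx, dy) < dead_zone:
        return None
    sector = 2 * math.pi / len(options)
    # Angle measured clockwise from "up", normalised to [0, 2*pi).
    angle = math.atan2(dx, dy) % (2 * math.pi)
    # Shift by half a sector so that option 0 is centred on "up".
    index = int(((angle + sector / 2) % (2 * math.pi)) // sector)
    return options[index % len(options)]

tools = ["measure", "draw", "colour", "images"]
print(pie_selection((0, 0), (50, 0), tools))   # pointer moved to the right
print(pie_selection((0, 0), (5, 5), tools))    # still inside the dead zone
```

Because only the direction matters once the pointer has left the dead zone, the distance moved does not have to be precise, which suits an unsteady hand-held pointer.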

Application in the Software Framework

An “InteractionKernel” was implemented as a software library that translates the input data from the various input devices (laser-pointer tracker, Wiimote® or mouse) and displays the necessary UI elements in the 3D CAAD model. All application clients in the software framework can use this library so that they can be controlled remotely. The “InteractionKernel” also controls which application client (e.g. “Colored Architecture” or “Designing with Images”) currently has input focus in the network. It contains a central pie-menu, opened with the middle mouse button or the Wiimote® “Home” button, from which all functions can be started. This menu provides access to all functions in the application client and the option to switch to another application client. The pie-menu also serves to activate the interaction modes; the cursor symbol denotes the currently active function. The mouse or Wiimote® button applies the function. The following sections describe a selection of possible on-site planning applications.
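The division of responsibilities described here (input focus, a central menu, an active function that a button press applies) might be sketched as follows. This is a simplified, single-process illustration and not the actual “InteractionKernel” API: the class `InteractionKernelSketch`, its methods, the `switch:` prefix and the registered functions are all hypothetical, and networking between clients is left out.

```python
class InteractionKernelSketch:
    """Routes device-independent input to the application client with focus."""

    def __init__(self):
        self.clients = {}            # client name -> {function name: callable}
        self.focus = None            # client that currently has input focus
        self.active_function = None  # interaction mode chosen from the menu

    def register(self, name, functions):
        self.clients[name] = functions
        if self.focus is None:
            self.focus = name

    def menu_entries(self):
        """Central menu: functions of the focused client, followed by one
        entry per other client for switching the input focus."""
        own = list(self.clients[self.focus])
        switches = [f"switch:{n}" for n in self.clients if n != self.focus]
        return own + switches

    def choose(self, entry):
        if entry.startswith("switch:"):
            self.focus = entry.split(":", 1)[1]
            self.active_function = None
        elif entry in self.clients[self.focus]:
            self.active_function = entry
        else:
            raise KeyError(entry)

    def apply(self, point):
        """Called on a mouse or Wiimote button press at a 3D point."""
        if self.active_function is None:
            return None
        return self.clients[self.focus][self.active_function](point)

kernel = InteractionKernelSketch()
kernel.register("Colored Architecture", {"measure": lambda p: ("measure", p)})
kernel.register("Designing with Images", {"place": lambda p: ("place", p)})
kernel.choose("measure")
print(kernel.apply((1.0, 2.0, 0.0)))
```

Keeping the device handling in one library means an application client only has to register its functions; it does not need to know whether the input came from the laser-pointer tracker, the Wiimote® or a mouse.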

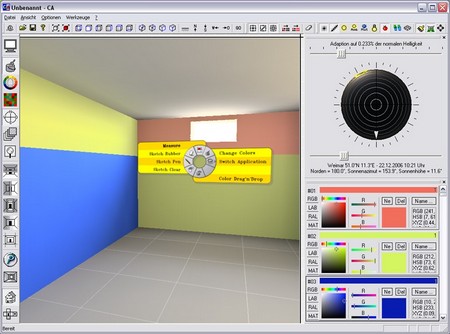

Colored Architecture

The software prototype “Colored Architecture” (CA) was developed to support the design process for colours and materials (Tonn 2005 and Tonn 2008). The tool addresses the deficits of digital colour and material design and supports digital planning with colour and materials from the initial design, through the planning phase to specification. The digital support of colour and material design in CA uses and adapts existing strategies, instruments and representations, e.g. alternative variants, colour studies and colour relationships such as harmonies and contrasts. To reliably assess and evaluate the results of colour and material choices, integrated radiosity visualisation is employed as it is able to represent interactions between different surfaces such as reflections.
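To make the idea of colour relationships concrete, the sketch below derives a complementary colour and two analogous colours by rotating the hue of a base colour. It only illustrates one common textbook notion of colour harmony using Python's standard `colorsys` module; it is not the method used in “Colored Architecture”, and the function names and the 30 degree spread are assumptions.

```python
import colorsys

def rotate_hue(rgb, degrees):
    """Rotate the hue of an 8-bit RGB colour, keeping lightness and saturation."""
    r, g, b = (c / 255 for c in rgb)
    h, l, s = colorsys.rgb_to_hls(r, g, b)
    h = (h + degrees / 360.0) % 1.0
    return tuple(round(c * 255) for c in colorsys.hls_to_rgb(h, l, s))

def complementary(rgb):
    """Colour on the opposite side of the hue circle (a strong contrast)."""
    return rotate_hue(rgb, 180)

def analogous(rgb, spread=30):
    """Two neighbouring hues on either side of the base colour."""
    return rotate_hue(rgb, -spread), rotate_hue(rgb, spread)

wall = (255, 0, 0)
print(complementary(wall))
print(analogous(wall))
```

A design tool would typically offer such derived colours as suggestions next to the chosen base colour, leaving the final judgement to the designer and to the radiosity visualisation.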

The following examples show work undertaken in the windowless ARVis-Lab at the Bauhaus-Universität Weimar. The hardware setup consists of two wide-angle video projectors mounted on the ceiling (Fig. 9), the Pan-Tilt-Zoom camera (Fig. 3), a computer that drives the projectors and a mobile laptop on which the application clients run. The pie-menu can be used to start the different functions of the “Colored Architecture” software prototype (Fig. 7). In addition to a drawing function that can be used, for example, to trace elements on a wall such as a crack or other damage (Fig. 10), and a measuring tool (Fig. 14, Fig. 15), a number of colour design functions are available. By way of example, a colour picker context menu has been implemented so that the colour of surfaces, or areas thereof, can be designated on site at true scale (Fig. 11, Fig. 12).

Fig. 9. Hardware setup in the ARVis-Lab

Fig. 10. Tracing the line of a crack in the building fabric

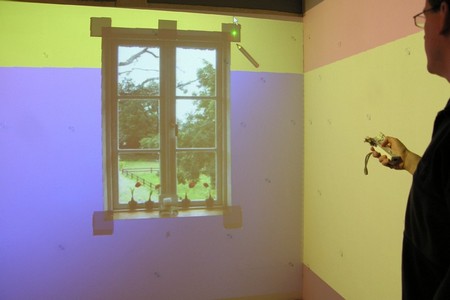

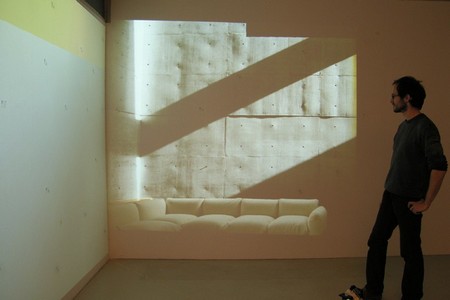

Designing with Images

The “Designing with Images” software prototype allows one to capture visual impressions and to work with them in the design process (Schneider et al., 2007). This approach to designing with images is best known from image editing programs used to create photomontages. These are currently limited to two dimensions, but by extending the same principle into the third dimension, collages can be experienced spatially. Planning with images and photos allows the architect to compose photo-realistic spaces in the early phases of the design process and is especially useful for interior design, where photorealism is particularly desirable (Fig. 16).

Fig. 14. Checking the new width of the window

Fig. 15. Checking the door and window design

Fig. 16. Experience of the spatial impression

The images can be inserted into the scene via drag and drop, and they adapt their position to the surfaces of the model. As in traditional image editing programs, it is possible to resize and rotate these images (Fig. 13). Where images are laid on top of other images they stack, making it possible to design in layers. Furthermore, images can be replaced, which makes it easy to compare different variants rapidly.

Conclusion and outlook

In this paper we have presented an augmented-reality-based user interaction method that uses laser-pointer tracking and is suitable for use on site. In addition to an “InteractionKernel” for use in the distributed software framework, we have examined the use of pie-menus in different examples as a means of controlling and selecting functions. The integration of speech control for adding text is also envisaged. Similarly, an IR-marker tracking method is planned so that the position of the viewer can be determined for a 3D stereo projection.

A further aim of the research project is the development and integration of a motorised Pan-Tilt-Projector into the software framework. Through the use of a mobile projector, it should be possible to partially augment an entire room. The projection is then continually adapted to the rotation angle of the projector. A camera attached to the Pan-Tilt-Projector can follow the position of the laser dot of the input device so that through the shifting projection surfaces, the entire design of the room can be experienced.
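How a motorised unit might be steered towards the laser dot can be sketched as follows. This is a hypothetical illustration of the planned behaviour, not part of the existing framework: it assumes the dot position is already known in room coordinates, that pan is measured about the vertical z axis and tilt as elevation, and that the motors move by a limited angle per update. The names `aim_angles` and `step_towards` and the step size are assumptions.

```python
import math

def aim_angles(device_pos, target):
    """Pan and tilt (in degrees) that point a device at a 3D target."""
    dx, dy, dz = (target[i] - device_pos[i] for i in range(3))
    pan = math.degrees(math.atan2(dy, dx))
    tilt = math.degrees(math.atan2(dz, math.hypot(dx, dy)))
    return pan, tilt

def step_towards(current, desired, max_step=5.0):
    """Move one angle towards its desired value by at most max_step degrees,
    taking the shorter way around the circle."""
    diff = (desired - current + 180.0) % 360.0 - 180.0
    if abs(diff) <= max_step:
        return desired
    return current + math.copysign(max_step, diff)

# Assumed example: projector on the ceiling, laser dot on the floor.
pan, tilt = aim_angles((0.0, 0.0, 2.0), (2.0, 0.0, 0.0))
print(round(pan, 1), round(tilt, 1))
print(step_towards(0.0, pan), step_towards(0.0, tilt))
```

Limiting the step per update keeps the projected image from jumping when the laser dot moves quickly; the projection itself would still have to be re-warped for each new rotation angle.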

Fig. 17. Discussing a design

Fig. 18. The actual location in the ARVis-Lab

Augmented reality technology opens up great potential for supporting the architectural design process. The ability to make and present design decisions at true scale on site “for real” adds an entirely new dimension to architectural design (see Figs. 17 and 18). The long-term aim of the research project is to take the practice of architectural design to a new level.

References

1. Bimber, O. and Raskar, R.: 2005, Spatial Augmented Reality - Merging Real and Virtual Worlds, A K Peters Ltd., Wellesley / USA.

2. Callahan, J., Hopkins, D., Weiser, M. and Shneiderman, B.: 1988, An empirical comparison of pie vs. linear menus, in: J. J. O'Hare, Ed., Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, ACM, New York, NY, 95-100.

3. Hommerich, C., Hommerich, N. and Riedel, F.: 2005, Zukunft der Architekten – Berufsbild und Märkte. Eine Untersuchung im Auftrag der Architektenkammer Nordrhein-Westfalen, available at: www.bakcms.de/bak/datendakten/architektenbefragungen/Zukunft_der_Architekten_Endbericht.pdf: Jan 2006.

4. Kurz, D., Häntsch, F., Grosse, M., Schiewe, A. and Bimber, O.: 2007, Laser Pointer Tracking in Projector-Augmented Architectural Environments, in: IEEE International Symposium on Mixed and Augmented Reality (ISMAR'07), Nara, Japan , pp. 19-26.

5. Petzold, F., Thurow, T. and Donath, D.: 2001, Geometrieerfassung und -Abbildung in der Bestandsaufnahme, in Richard Romberg, Manuel Schulz (ed.), Forum Bauinformatik 2001, VDI Verlag Düsseldorf, pp. 260-267.

6. Petzold, F. and Donath, D.: 2004, The Building as a Container of Information - the Starting Point for Project Development and Design Formulation, in Proceedings of the ICCCBE-X, Weimar, Germany, pp. 164-165.

7. Petzold, F. and Donath, D.: 2005, Digital Building Surveying and Planning in Existing Buildings - Capturing and Structuring Survey Information, in A. Amir (ed.), Proceedings of ASCAAD 1st International Conference, Dhahran, Saudi-Arabia, pp. 73-87.

8. Petzold, F., Tonn, C. and Schneider, S.: 2008, Scale 1:1 – Design Support Site With and Within Existing Build Context, in AMIT 2008 2(3), available at http://www.marhi.ru/AMIT/2008/2kvart08/Frank_Petzold/article.php

9. Schneider, S., Tonn, Ch. and Petzold, F.: 2007, Designing with images – Augmented reality supported on-site Trompe-l'œil, in: Em'body'ing Virtual Architecture – ASCAAD, Alexandria, Egypt, pp. 275-290.

10. Tonn, Ch.: 2005, Computergestütztes dreidimensionales Farb-, Material- und Lichtentwurfswerkzeug für die Entwurfsplanung in der Architektur, Diploma Thesis, Bauhaus-Universität Weimar.

11. Tonn, Ch.: 2008, Colored Architecture, Software Prototype, Chair for Computer Science in Architecture, Bauhaus-Universität Weimar.